Lasso Regression clearly explained

Before talking about Lasso Regression, let us do a quick recap of Ridge Regression. In Ridge Regression, we minimize

SSE+λ∗(slope)^2

where SSE = sum of squared residuals (errors), λ = severity of the penalty, and slope = the slope of the fitted line (the coefficient that multiplies the predictor).

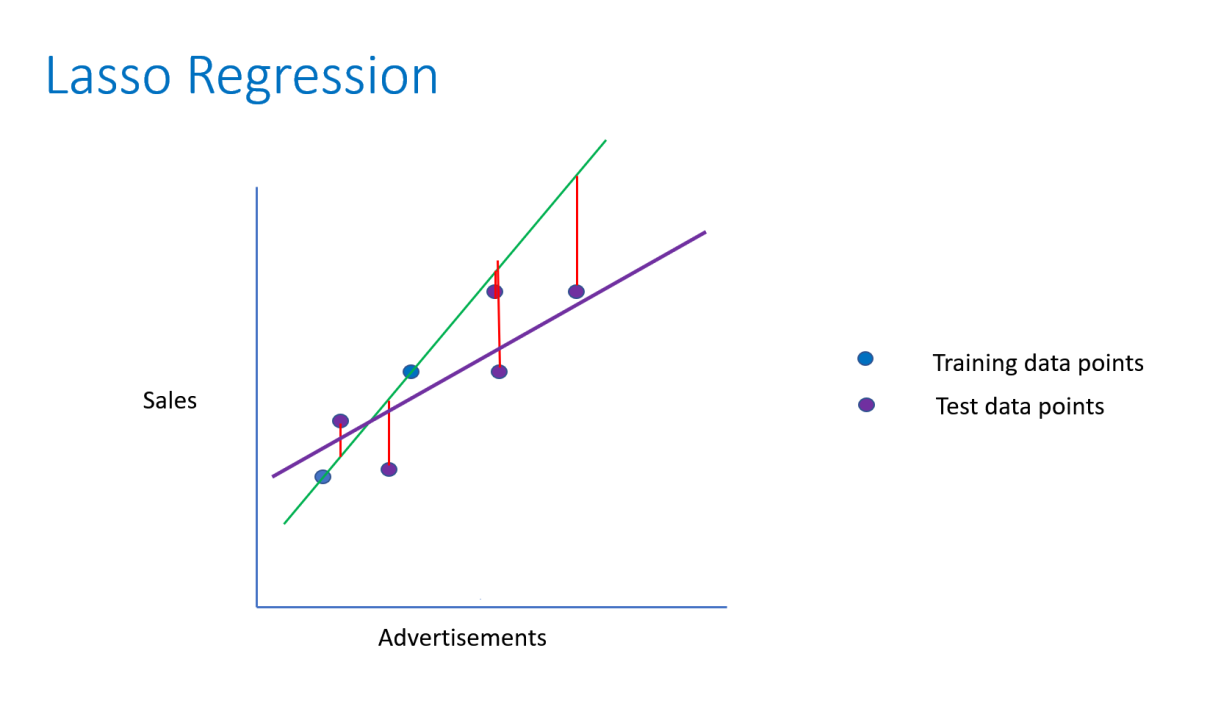
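As a quick illustration, the Ridge objective above can be written as a small Python function. This is a minimal sketch for a single predictor. The function names and the three-point dataset are hypothetical, chosen only to keep the arithmetic easy to follow, and the intercept is left unpenalized, as in the formula.

```python
def sse(xs, ys, intercept, slope):
    """Sum of squared residuals for the line y = intercept + slope * x."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


def ridge_objective(xs, ys, intercept, slope, lam):
    """SSE plus the Ridge penalty lam * slope**2 (the intercept is not penalized)."""
    return sse(xs, ys, intercept, slope) + lam * slope ** 2


# Hypothetical toy data, used only for illustration.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 2.5, 4.5]
print(ridge_objective(xs, ys, intercept=0.5, slope=1.0, lam=2.0))
```

For the line y = 0.5 + 1.0∗x the residuals are 0.5, 0 and 1.0, so SSE = 1.25, and the penalty adds 2.0∗(1.0)^2 = 2.0, giving 3.25.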

We know that the basic idea behind Ridge Regression is to find a line that fits the testing data better, at the cost of slightly more error on the training data. In other words, we accept a small increase in bias in exchange for a (hopefully larger) drop in variance.

When λ = 0, the penalty term vanishes, so we are only minimizing SSE and the Ridge Regression line is the same as the least-squares line. As λ increases, the Ridge Regression slope decreases. When λ becomes very large, the slope shrinks a great deal, but it never becomes exactly zero.
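For a single predictor, this behaviour can be seen directly. With an unpenalized intercept, the slope that minimizes SSE+λ∗(slope)^2 has the closed form S_xy / (S_xx + λ), where S_xy is the sum of (x − mean of x)∗(y − mean of y) and S_xx is the sum of (x − mean of x)^2. The sketch below evaluates it for a few values of λ; the function name and toy data are hypothetical.

```python
def ridge_slope(xs, ys, lam):
    """Closed-form Ridge slope for one predictor with an unpenalized intercept."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    # A larger lam enlarges the denominator, shrinking the slope toward 0.
    return s_xy / (s_xx + lam)


# Hypothetical toy data, used only for illustration.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 2.5, 4.5]
for lam in [0.0, 1.0, 10.0, 1000.0]:
    print(lam, ridge_slope(xs, ys, lam))
```

At λ = 0 this is the ordinary least-squares slope (1.25 for this data). As λ grows the slope keeps shrinking toward 0, but since the numerator does not change, it stays positive for every finite λ.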

Now, coming to Lasso Regression. Ridge Regression and Lasso Regression are very similar, and both help us reduce overfitting. But unlike Ridge Regression, where we minimize SSE+λ∗(slope)^2, in Lasso Regression we minimize SSE+λ∗abs(slope), where abs(slope) denotes the absolute value of the slope.

In other words, Ridge Regression penalizes the square of the slope, whereas Lasso Regression penalizes its absolute value.
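To see how differently the two penalties treat small slopes, the sketch below compares λ∗(slope)^2 with λ∗abs(slope) for a few slope values at λ = 1. The helper names are hypothetical.

```python
def ridge_penalty(slope, lam):
    """Ridge penalty: lam times the squared slope."""
    return lam * slope ** 2


def lasso_penalty(slope, lam):
    """Lasso penalty: lam times the absolute value of the slope."""
    return lam * abs(slope)


lam = 1.0
for slope in [2.0, 1.0, 0.5, 0.1, -0.1]:
    print(f"slope={slope:5.1f}  ridge={ridge_penalty(slope, lam):.4f}  "
          f"lasso={lasso_penalty(slope, lam):.4f}")
```

For slopes larger than 1 in size, the squared penalty is the bigger one. For small slopes it becomes tiny (0.1 squared is 0.01), while the absolute-value penalty stays proportional to the slope.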

Why is taking the absolute value of the slope so important?

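When λ = 0, the Lasso Regression line is the same as the least-squares line. As the value of λ increases, the slope gets smaller. Once λ is large enough, the slope becomes exactly 0, which never happens in Ridge Regression. The reason is that the absolute-value penalty keeps pushing the slope toward zero with the same strength, λ, however small the slope already is, whereas the push from the squared penalty (2∗λ∗slope) fades away as the slope approaches zero.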
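The single-predictor case also has a closed form for Lasso. Minimizing SSE+λ∗abs(slope) with an unpenalized intercept gives a "soft-thresholded" slope: S_xy is pulled toward zero by λ/2, and if that would push it past zero, the slope is set to exactly 0. The sketch below uses the same hypothetical toy data as the Ridge examples; the function name is illustrative.

```python
def lasso_slope(xs, ys, lam):
    """Closed-form Lasso slope for one predictor with an unpenalized intercept."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    # Soft-thresholding: move s_xy toward zero by lam / 2 ...
    if s_xy > lam / 2:
        return (s_xy - lam / 2) / s_xx
    if s_xy < -lam / 2:
        return (s_xy + lam / 2) / s_xx
    # ... and stop at exactly zero instead of crossing it.
    return 0.0


# Hypothetical toy data, used only for illustration.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 2.5, 4.5]
for lam in [0.0, 1.0, 4.0, 5.0, 100.0]:
    print(lam, lasso_slope(xs, ys, lam))
```

Here S_xy = 2.5 and S_xx = 2, so the slope is 1.25, 1.0 and 0.25 for λ = 0, 1 and 4, and exactly 0 for every λ ≥ 5 (that is, λ ≥ 2∗abs(S_xy)). The slope reaches 0 at a finite λ, not only in the limit.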

For example, in a model that predicts Sales from several features, we can write

Sales = β0+slope∗(Advertisement)+β1∗(Price of the Product)+β2∗(Size of the Shop)

and the list can go on.

Now using some common sense we can say that not all the independent variables are important in predicting Sales.

Let us say that the Size of the Shop is not an important feature for predicting the Sales of the product, because all the shops in that location are roughly the same size.

So, if we run Ridge Regression, then as λ becomes large, slope and β1 shrink only a little (because Advertisement and Price of the Product are important for predicting Sales), whereas β2 shrinks a lot (because Size of the Shop is not), but β2 never becomes exactly 0.

On the other hand, if we use Lasso Regression, then for a suitably large λ, slope and β1 shrink only a little, but β2 becomes exactly 0.
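The Sales example can be simulated with a small coordinate-descent solver. This is a sketch under clearly stated assumptions: the four shops and their numbers are made-up toy data (not real sales figures), Size of the Shop barely varies and is almost unrelated to Sales, the intercept is unpenalized, and the solver is a hand-written coordinate descent rather than any particular library's implementation. The function names are hypothetical.

```python
def soft_threshold(rho, threshold):
    """Shrink rho toward zero by threshold; return exactly 0.0 if it would cross zero."""
    if rho > threshold:
        return rho - threshold
    if rho < -threshold:
        return rho + threshold
    return 0.0


def fit_penalized(columns, ys, lam, penalty, sweeps=100):
    """Coordinate descent for SSE + lam * sum(b**2) ("ridge") or SSE + lam * sum(abs(b)) ("lasso").

    The intercept is not penalized: the data are centered, and the intercept is recovered at the end.
    """
    n = len(ys)
    means = [sum(col) / n for col in columns]
    xc = [[v - m for v in col] for col, m in zip(columns, means)]
    y_bar = sum(ys) / n
    yc = [y - y_bar for y in ys]
    coefs = [0.0] * len(columns)
    for _ in range(sweeps):
        for j, xj in enumerate(xc):
            # Partial residual: the part of y not explained by the other features.
            r = [yc[i] - sum(coefs[k] * xc[k][i] for k in range(len(xc)) if k != j)
                 for i in range(n)]
            rho = sum(xj[i] * r[i] for i in range(n))
            z = sum(v * v for v in xj)
            if penalty == "lasso":
                coefs[j] = soft_threshold(rho, lam / 2) / z
            else:
                coefs[j] = rho / (z + lam)
    intercept = y_bar - sum(b * m for b, m in zip(coefs, means))
    return intercept, coefs


# Hypothetical toy data for four shops (not real measurements).
advertisement = [1.0, 1.0, 3.0, 3.0]
price = [4.0, 6.0, 4.0, 6.0]
shop_size = [9.0, 11.0, 11.0, 9.0]
sales = [18.9, 15.1, 25.1, 20.9]

features = [advertisement, price, shop_size]
_, lasso_coefs = fit_penalized(features, sales, lam=2.0, penalty="lasso")
_, ridge_coefs = fit_penalized(features, sales, lam=2.0, penalty="ridge")
print("Lasso [slope, b1, b2]:", lasso_coefs)
print("Ridge [slope, b1, b2]:", ridge_coefs)
```

With λ = 2 on this toy data, Lasso returns roughly [2.75, -1.75, 0.0] and Ridge roughly [2.0, -1.33, 0.067]. Both shrink every coefficient, but only Lasso removes Size of the Shop from the model entirely.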

Finally, we can say that Lasso Regression performs feature selection, a form of Dimension Reduction: features that are not important in predicting the dependent variable are removed from the model. This gives Lasso Regression an advantage over Ridge Regression when many features are irrelevant, because it reduces overfitting and removes useless features at the same time. But when most of the variables in the model are useful, Ridge Regression is usually the better choice.